Newsletter Tech

16 February 2021 | 262 min read

📚 Summary

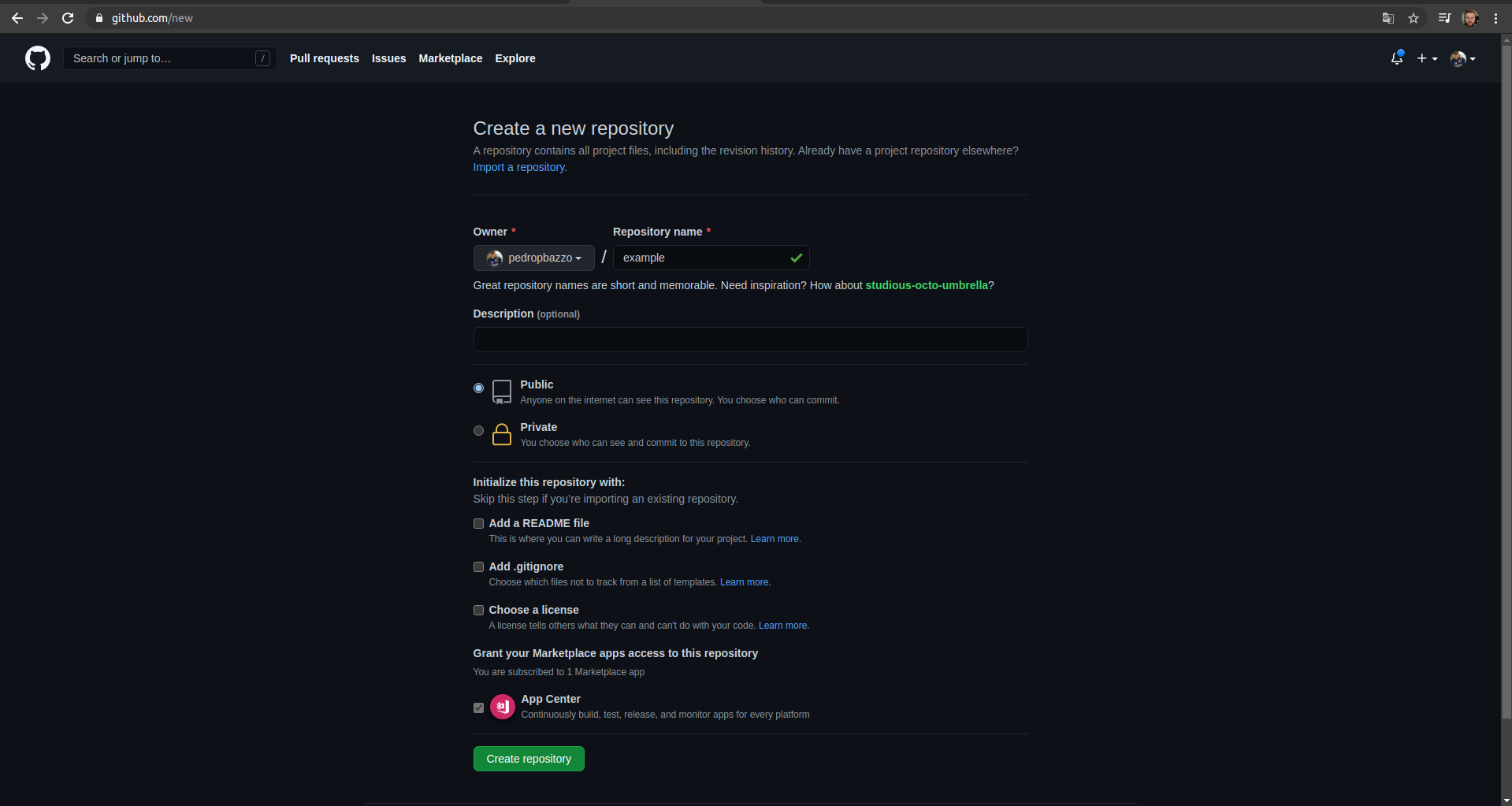

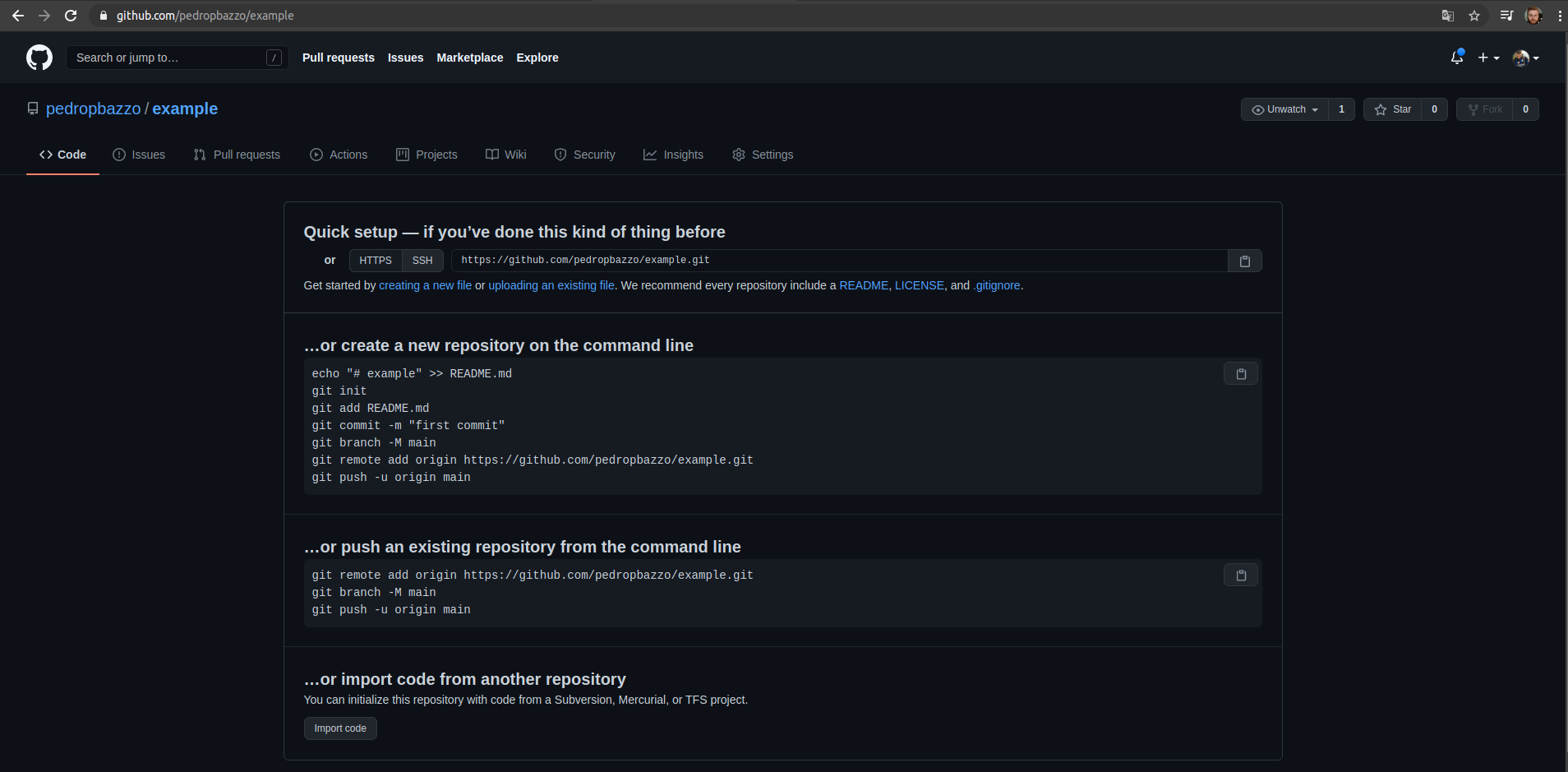

[EN-US] What is Next.js?

🎬 See the full video by clicking here

👩🏼💻 Click here to view the repository

Next.js is a React framework with a focus on production and efficiency created and maintained by the Vercel team, Next seeks to bring together several features such as hybrid and static content rendering, TypeScript support, pre-fetching, route system, feature packs and several plugins and examples to accelerate your development providing a complete structure for you to start your project. Next.js is an open source framework under the MIT license and is being used by several companies with good growth in the market.

With all these pre-configured facilities it is assimilated to a create react app where you start the project very fast and without worrying about webpack settings, folder structures, route configuration and so on.

About Next.js:

Main features of Next.js

Next.js and Server Side Rendering

Mini introductory class on Next.js

How to start a project with Next.js

Indication of plugins and packages

Main features of Next.js

Hybrid SSG and SSR: Render the pages during the build (Static Site Generation) or on each request (Server-side Rendering) in the same project.

Hot Code Reloading: Any changes made to your code during development will be reflected in the local application in real time, updating automatically.

Automatic Routing: The URLs in Next js are mapped based on the pages folder, so any file in this folder becomes a page, without the need for extra configuration. (you can customize this if you need to)

Automatic Code Splitting: This functionality allows pages to be rendered only with the packages they need. Let's say that only one page of your website uses Ant Design, this package will only be linked to this page. This ensures that each page has only the code necessary for its rendering, decreasing the size (kB) of each page and improving the rendering speed. There was a contribution from the Google team to improve this functionality recently.

TypeScript support: Integrated automatic configuration and compilation similar to an IDE.

Internationalization: As a standard, Next.js already has a structure for identifying different languages, working with exclusive routes and translations via locale.

Image Optimization: Next's native component for optimizing your images with resizing, lazyload, images in a modern format and easy to implement.

Next.js and Server Side Rendering

The big difference at the beginning of Next was the possibility of using rendering on the server side (SSR) this solves a problem in applications and websites built with React that mainly need SEO. In some React applications you will find that it is not always efficient to load all content on the client side (client-side) which is the React standard. Currently Next js is able to work in a hybrid way with SSG and SSR.

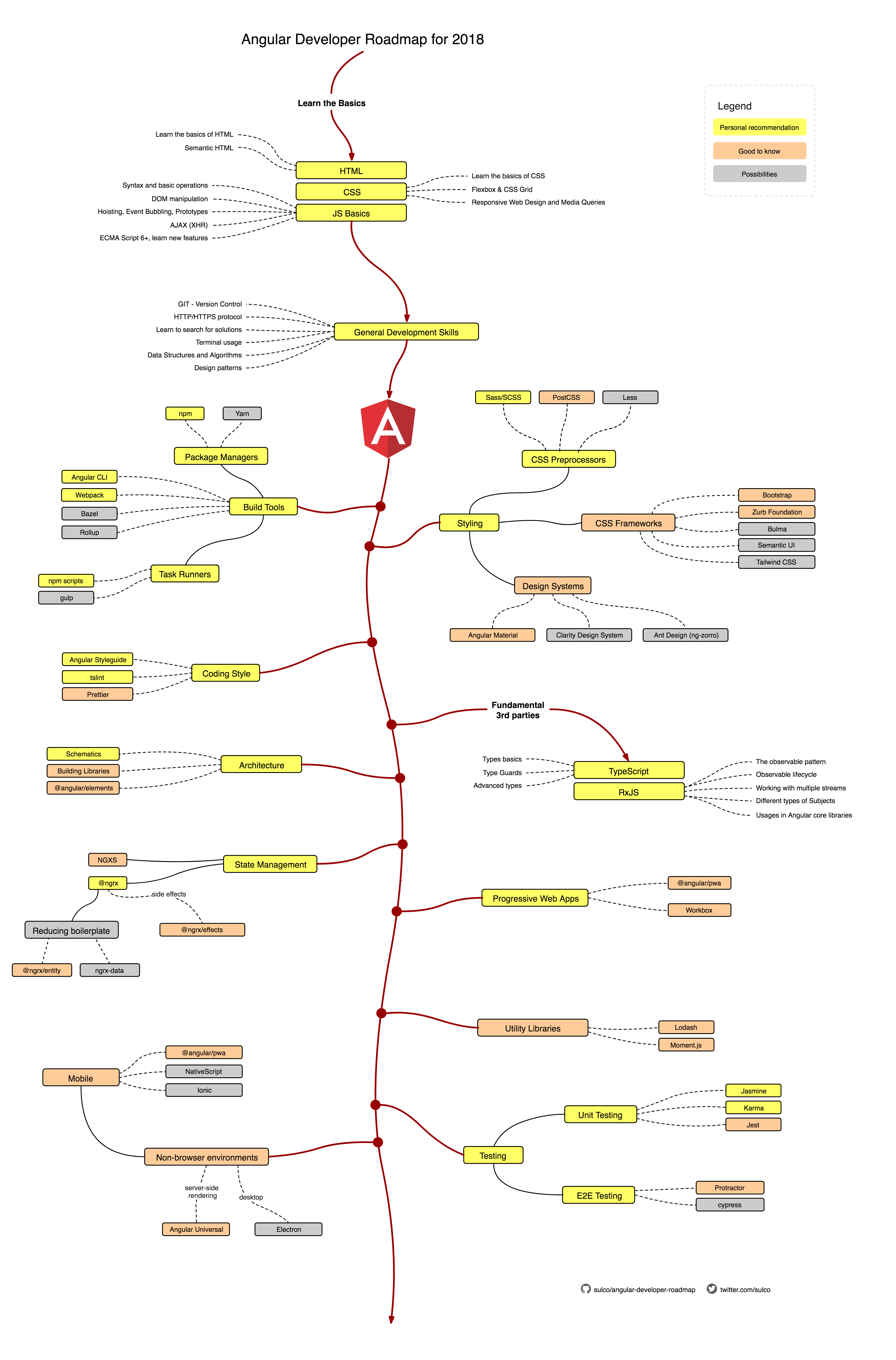

[EN-US] Angular

🎬 See the full video by clicking here

👩🏼💻 Click here to view the repository

Angular is a JavaScript (Typescript) based framework developed by Google for building web applications. Angular was established in 2009 as AngularJS, which used JavaScript as it’s a programming language, but in 2016, Google completely rewrites Angular. Since then, Angular Use Typescript as its language, typescript, is similar to JavaScript, and it is also considered as the superset of JavaScript.

Angular is a front-end Web Framework so we can only make client-side dynamic WebPages using Angular, and Google itself uses this beautiful and powerful framework to build client-side dynamic pages. One of the main features of angular is, we can use angular to build a single page application because it’s based on MVC architecture. Angular supports two-way data binding that requires in real-time application.

Advantages

With angular, we can quickly develop an application.

Support MVC architecture.

Two ways binding a perfect choice for real-time applications

The ideal framework for single-page applications

Fast-growing community

Enhanced design Architecture

Disadvantages

Steep learning curve

Slow processing

Limited SEO Option

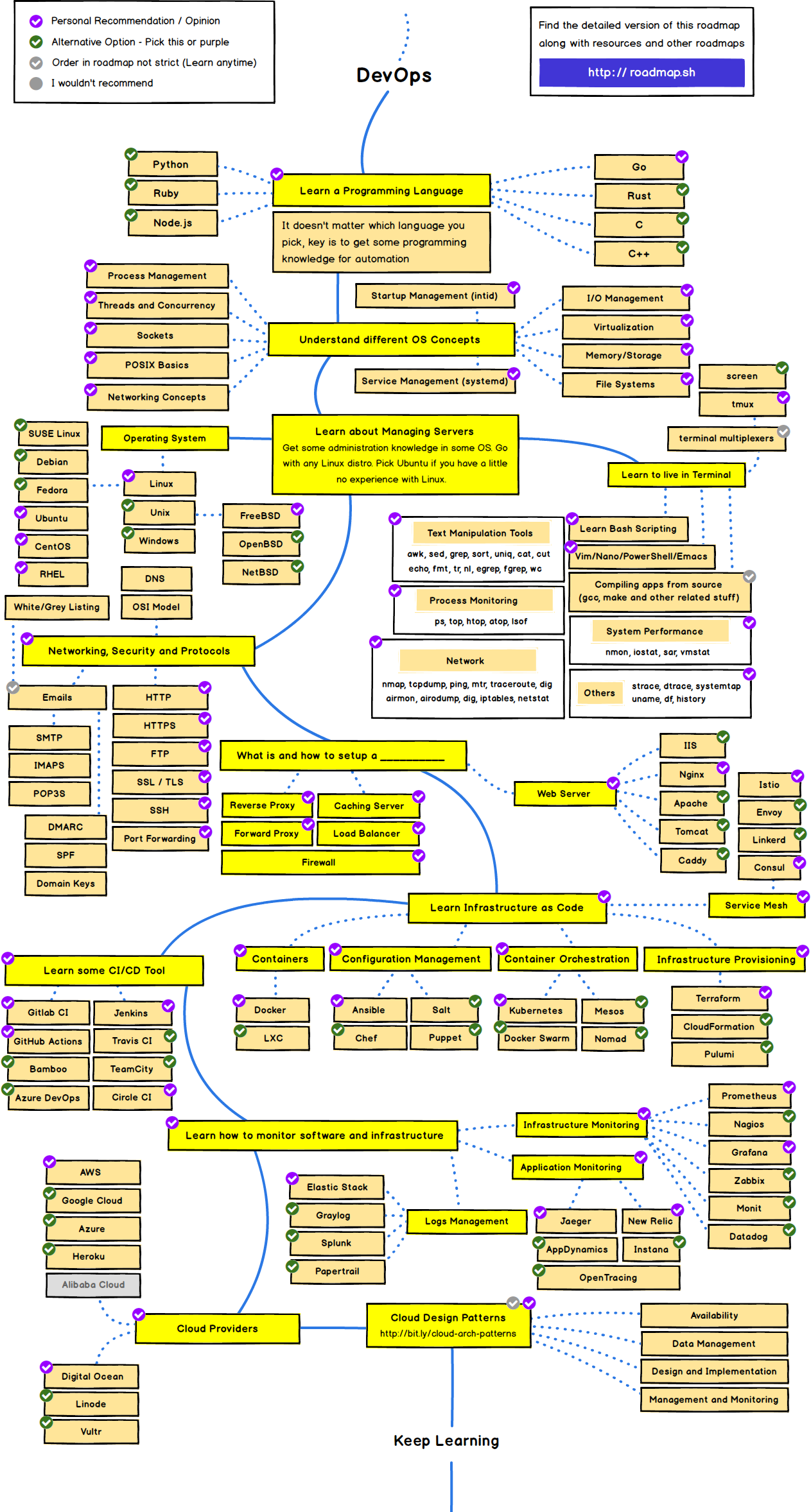

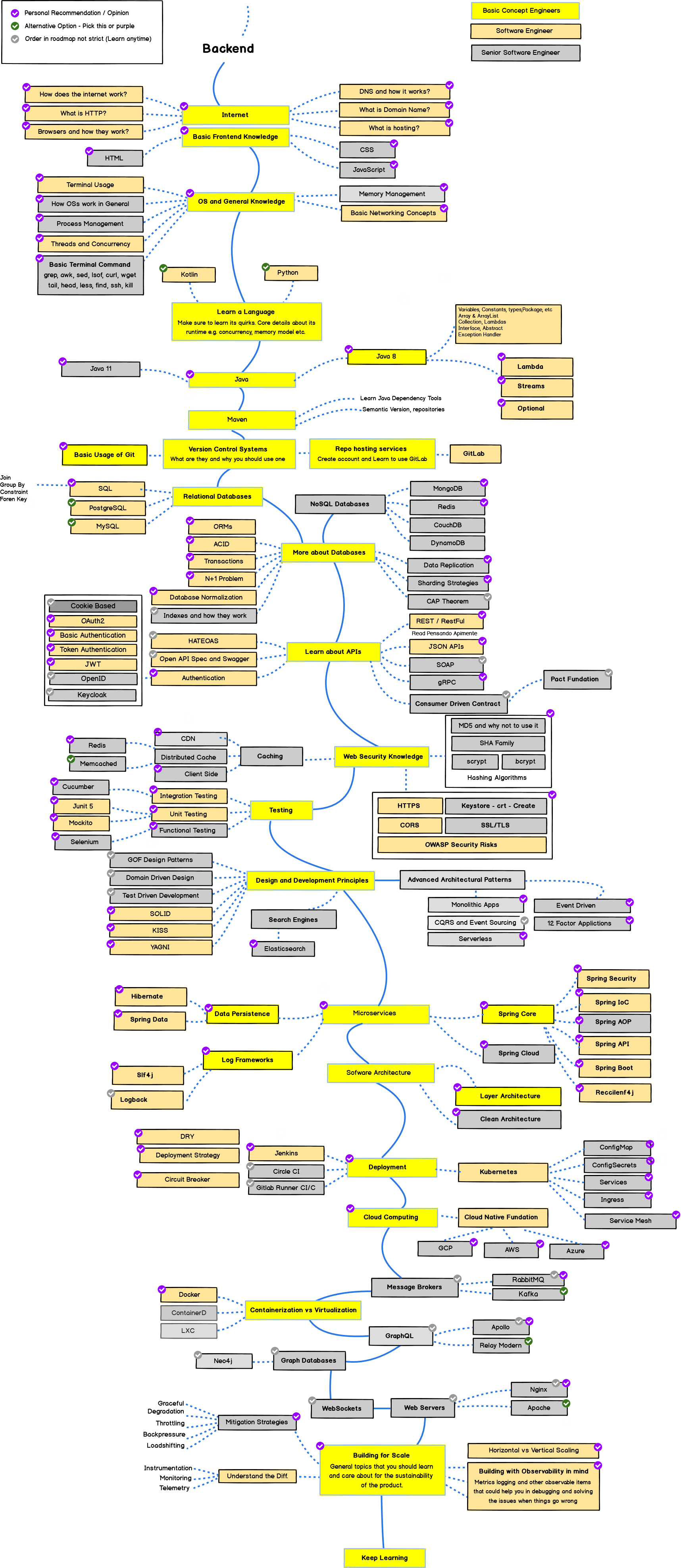

Roadmap

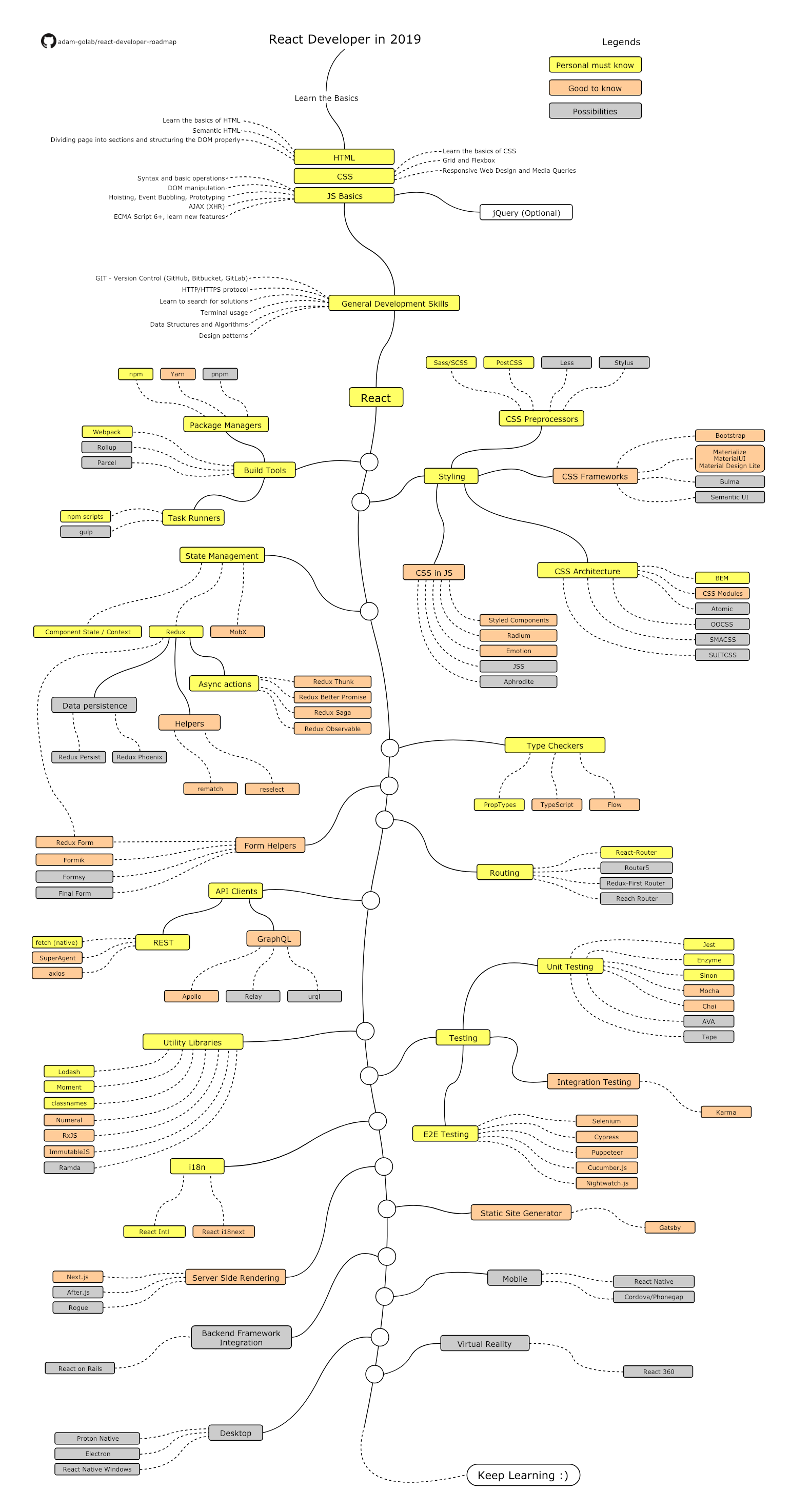

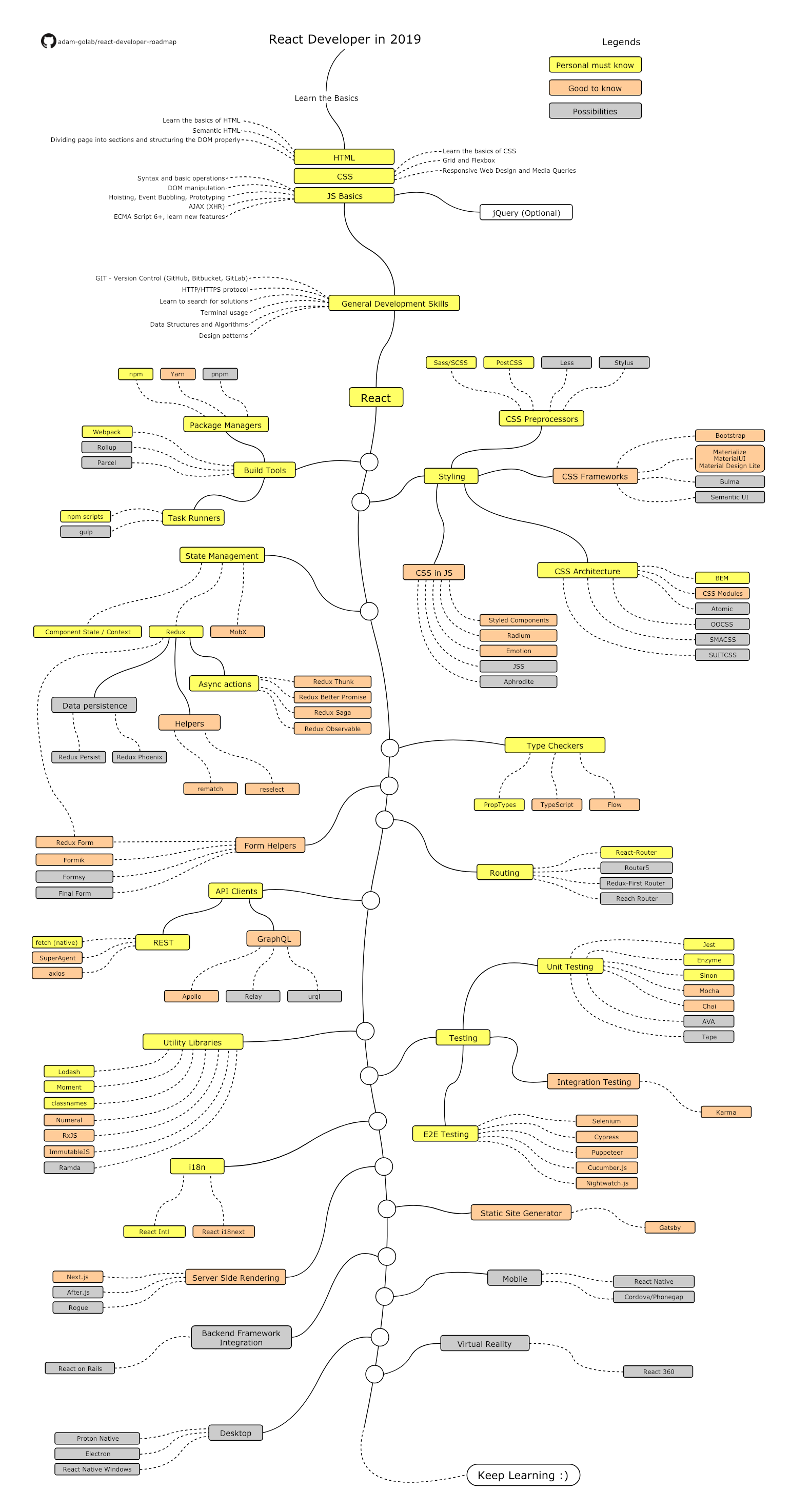

[EN-US] React

🎬 See the full video by clicking here

👩🏼💻 Click here to view the repository

One of the most popular front-end web development frameworks is Reactjs which is developed by Facebook. Though React is not a web development framework but an open-source JavaScript library that is highly used for developing user interfaces.

Using react you can create single page application, and even a mobile app. As React is a library, so it does not support many features like other Front-end frameworks, that’s why to build a proper single page application, React integrates with other libraries for state management, routing, and interaction with API.

Advantages

it’s virtual DOM support fast manipulation in the document.

It can be integrated with other libraries

Support for mobile web-application

Reusable components

Easy to use tools

Disadvantages

Does not have well-organized documentation

Complex structure than JavaScript.

Less focus on UI

[EN-US] Vue

🎬 See the full video by clicking here

👩🏼💻 Click here to view the repository

Vue is another most popular open-source JavaScript framework mainly used for creating a single page application. Vue.js came into existence in February 2014 and is developed by Evan You. This framework uses “high decoupling,” which facilitate developers to create attractive and easy to use user interfaces (UIs). It is based on Model-View-View model (MVVM) architecture.

Advantages

Fast Development

Small size

Easy to maintain and to learn

Can be integrated with other applications

Virtual DOM rendering and performance

Components reusability

Solid tooling ecosystem

Disadvantages

Does not have stable tools

Does not support by tech-giants

Less plug-in and components

[EN-US] GraphQL vs. REST | What are the diferences?

GraphQL refers to a query language and runtime for application programming interfaces, known for providing users with the precise data they are looking for. GraphQL allows developers to create flexible and fast APIs and can be deployed in an integrated development environment known as GraphiQL. GraphQL is widely considered a viable alternative to REST. Developers using GraphQL can create requests capable of fetching data from multiple sources in one API call.

GraphQL users can add or remove fields in an API without making any changes to existing queries. Users can use their chosen API development methods and rely on GraphQL to maintain the desired functionality for customers.

What are the benefits of GraphQL? These are the main advantages of using GraphQL:

Declarative data search GraphQL uses the search for declarative data for its queries. This is advantageous for users, as data can be selected, including its fields and entities, with a single query request on data relationships. GraphQL dictates which fields are required by your UI, functioning in principle as a solution in which the UI is looking for data.

GraphQL queries are efficient in partial data selection for UI operations to retrieve data in a single request. Customers using GraphQL are aware of data requirements, and servers are aware of data structures and requirements for using data from a source.

Solves overfetching GraphQL does not perform any excessive data searching. This is different from a RESTful API configuration in which a client generally extracts data excessively if there is an identical API. In GraphQL, the mobile client has the option of selecting different sets of fields. This allows GraphQL users to search only for the specific information needed for a user query.

GraphQL is versatile GraphQL is more versatile than most developers consider. It is decoupled from the back-end and front-end solutions and has JavaScript reference implementation. It facilitates the use of the server side and the client side in JavaScript libraries, including Express, Angular, Hapi, Koa and Vue and several others. GraphQL is capable of working similarly to the language-independent interface of REST.

What are the disadvantages of GraphQL? These are the main disadvantages of a GraphQL API.

Problems handling complex queries GraphQL queries can create performance-related problems, while allowing customers to execute specific requests. Problems occur if and when a customer requires many fields nested simultaneously. This is one of the reasons why many users choose the REST API when performing complex queries, as it allows them to search for data through various terminals using precise queries. Despite the need for multiple network calls, avoiding GraphQL performance problems can be a safer option.

Not the best option for small applications A smaller application may not require GraphQL, as it can benefit from the simplicity of REST. GraphQL is best suited for use cases where there are many multiple services. REST is also preferable as the best option for establishing connections for resource-oriented applications, as they do not need flexible GraphQL queries.

Cache is complex GraphQL does not use HTTP caching processes that make it easy to store request content. The cache allows users to reduce the amount of traffic to a server, making regularly accessed information more easily available.

Steep learning curve GraphQL is considered by many to be difficult to understand due to its steep learning curve. Users may need to learn the schema definition language to start using GraphQL. It may not be possible for all projects to allocate time and effort to gain familiarity with GraphQL. As a result, they tend to opt for the easiest to understand REST. Understanding GraphQL queries can take considerable time, although there are several useful resources.

What is REST?

REST, which stands for Representational State Transfer, is an architectural style for setting standards between web and computer systems. Facilitates the most natural communication between computer systems. RESTful systems are characterized by their statelessness and the tendency to differentiate server and client concerns. In a REST architecture, users can achieve a client and server independent implementation. This means that the client-side code can be changed without having any effect on server operations. In addition, the server side can be changed without having any effect on client operations.

What are the benefits of REST? Here are the main advantages of a REST API.

REST is scalable REST is a protocol known for its scalability and preferred by many for this reason. It draws a line between the server and the client, allowing for easier sizing of a product.

It is quite portable and flexible REST is also known for its flexibility and portability. Users find it easier to port data from one server to another or make changes to a database at any time. Developers can choose to host front-end and back-end on separate servers, allowing for better management.

Offers more independence As the client and the server are separated in a REST protocol configuration, developers find it much easier to implement developments in different areas of any project independently. In addition, a REST API is able to easily adapt to different platforms and syntax. As a result, developers can make use of different environments during the development phase.

What are the disadvantages of REST? Here are the disadvantages of a REST API.

Client server A limitation of REST stems from the fact that it depends on the concept of server and client separate from each other and can grow independently.

Stateless REST APIs are stateless in nature and allow developers to make independent calls. Each of your calls has all the necessary data for completion.

General requests REST has not been state and this type of API can increase request overheads, since the bulky loads of incoming and outgoing calls are handled. A REST API must be optimized for caching data.

Interface Decoupling a client from the server requires a uniform interface that facilitates independent application evolution without requiring the services, actions, or models of the application, which are included in the API layer.

On-demand code Code on Demand is a feature that allows applets and code transmission through the API to be used in the application.

Layered structure REST APIs have different architectural layers that create a hierarchical structure for modular and scalable applications.

GraphQL vs REST | What are the diferences? HTTP status codes The common status code for each GraphQL request, error or success is 200. It is quite different from the REST APIs, where different status codes point to different responses, that is, 200, 400 and 401 represent success, incorrect and unauthorized request, respectively.

Monitoring Monitoring is more convenient with HTTP status codes and REST APIs. Performing a health check on a given endpoint gives users an idea of the API uptime status. For example, a 200 status code means that an API is running. This is in stark contrast to GraphQL, since the analysis of the response body must be performed using a monitoring tool to detect possible errors that are being returned.

Cache In the REST API, it is possible to cache all server-side GET endpoints with a content delivery network. Endpoints are cached by browsers and marked for regular calls in the future. GraphQL is not compatible with HTTP specifications and is available through a single terminal. As a result, queries are not cached in a manner similar to the REST APIs.

Schema REST APIs do not depend on schema-type systems. On the other hand, GraphQL uses a type system to create API definitions. Fields mapped to types define a schema, which is a classification agreement between client and server.

GraphQL vs REST comparison table

The table below shows the most fundamental differences between GraphQL and REST.

| GraphQL |

|---|

| New |

| Larger apps |

| Customer-oriented |

| Mutation, query |

| No overfetching |

| Schema |

| Single API data fetch |

| Growing |

| Fast performance |

| Rapid development |

| Only with libraries |

| Fewer errors |

| REST |

|---|

| Mature |

| Small and medium apps |

| Server-based architecture |

| CRUD |

| The data is not linked to resources or methods |

| Endpoints |

| Multiple API calls with fixed data |

| More time needed for multiple calls |

| Slow performance |

| Best suited for complex queries |

Conclusion

GraphQL is a query and runtime language for application programming interfaces.

[EN-US] AWS vs. Azure vs. Google: Cloud Comparison

The top three cloud computing providers, AWS, Microsoft Azure and Google Cloud, have strengths and weaknesses that make them ideal for different use cases.

The competition for leadership in public cloud computing is a close race between the giants AWS vs. Azure vs. Google.

Clearly, for infrastructure as a service (IaaS) and platform as a service (PaaS), they maintain a high position among the many companies in this segment.

AWS practically dominates the market. In a 2018 report, Synergy Research Group noted that spending on cloud infrastructure services increased by a surprising 51% compared to the previous year's quarter, noting: “AWS's global market share has remained stable at around 33% in the last twelve months, even with the market rising, almost tripled in size ”.

Meanwhile, Microsoft is particularly strong on SaaS, while Google Cloud is positioned for aggressive growth - and is known for offering discounts.

Amazon Web Services has a range of tools that keep growing. Unparalleled features. However, the cost structure can be confusing.

Microsoft Azure has a cloud infrastructure with exceptional capacity. If you are an enterprise customer, Azure is definitely for you - few companies have a corporate history (and Windows support) like Microsoft.

Google Cloud entered the cloud market later and has no corporate focus. But its technical expertise is deep and its market-leading tools in artificial intelligence, machine learning and data analysis.

AWS vs. Azure vs. Google: general pros and cons Many experts recommend that companies assess their public cloud needs on a case-by-case basis and analyze which one offers the best for their needs. Each major supplier has strengths and weaknesses that make them a good choice for certain projects. Want to see?

Pros and cons of AWS

Amazon's greatest strength is its dominance of the public cloud market. In your Magic Quadrant for Cloud Infrastructure as a Service, Worldwide, Gartner noted: “AWS has been a market share leader in cloud IaaS for over 10 years”.

Part of the reason for its popularity is undoubtedly the enormous scope of its operations. AWS has a huge and growing range of services available, as well as the most comprehensive network of data centers worldwide. The Gartner report summed it up, saying, "AWS is the most mature and enterprise-ready provider, with the deepest resources to manage a large number of users and resources."

Amazon's big weakness is related to cost. Many companies find it difficult to understand the company's cost structure and manage those costs effectively when performing a high volume of workloads in the service.

When to choose AWS AWS is a great choice for analytical and web workloads, even large-scale data center migrations, AWS provides a range of services.

When it comes to computing, AWS provides the widest range of VM types. Currently, AWS also has the highest computing and storage options available on the market. Its wide variety of VM types (136 VM types and over 26 VM families) allows customers to run everything from small workloads on the web to the largest workloads.

For machine learning and AI workloads, AWS also provides the highest settings for GPU-enabled VM types. For workloads requiring one-time rentals for compliance and regulatory reasons, AWS now also provides

Block storage comes with a variety of options, such as dynamic resizing, different disk types (magnetic and SSD). Unlike other CSPs, AWS does not restrict IOPS by volume size. You can provision IOPS for an extra cost even for small disks.

In front of the managed relational database, AWS supports managed databases for MySQL, PostgreSQL, MariaDB, Oracle (SE and EE) and MS SQL (Web and Enterprise editions). In addition, they have their own MySQL and PostgreSQL compatible database, which offers Oracle-like performance for a low investment.

For NoSQL databases, AWS has been offering its DynamoDB product for over half a decade. AWS is an advocate and provides a variety of NoSQL databases created for this purpose. This includes DynamoDB, Neptune and Elasticache.

For network security, AWS has launched managed services to protect against DDoS (AWS Shield) and Web Application Firewall (WAF), along with AWS Inspector, AWS Config and CloudTrail for managing and auditing inventory and policies. GuardDuty provides threat detection.

AWS serves U.S. government workloads in separate US GovCloud regions (CIA and FBI).

Pros and cons of Microsoft Azure Microsoft was late to the cloud market, but took a step forward, essentially adopting local software - Windows Server, Office, SQL Server, Sharepoint, Dynamics Active Directory, .Net and others - and adapting it again for the cloud.

Azure Services

A big reason for Azure's success is the integration with Microsoft applications / software. Since Azure is fully integrated with these other applications, companies that use a lot of Microsoft software often find that it also makes sense to use Azure.

When to choose Azure Azure is a cloud platform of great importance on the market with a variety of features, which can be a preferred platform for customers who are already using Microsoft products. Although Azure supports several services based on open source products, Microsoft's cloud portfolio is what sets it apart from customers.

Azure has more than 151 types of VMs and 26 families that support everything from small workloads to HPC, Oracle and SAP workloads. Azure has Windows and several types of Linux (RHEL, CentOS, SUSE, Ubuntu). Azure has a separate family of instances for ML / AI workloads.

If you need to run next-generation workloads that require up to 128 vCPU and 3.5 TB memory, Azure can do it. If you have existing licenses for Windows OS, MS-SQL and bring them to the cloud (BYOL) through the Microsoft License Mobility Program, Azure is the option.

Azure was also the first cloud player to recognize the hybrid cloud trend. Azure also provided support for hybrid storage devices like StorSimple, which was unique in the public cloud space.

If you have a data center with predominantly Microsoft workloads and need to migrate on a large scale to the cloud, taking advantage of the well-known tools, Azure provides tools and services, such as Azure Site Recovery.

When it comes to SQL and NoSQL databases, Azure has a very complete set of services. It provides MS SQL Server and Managed SQL Datawarehouse. Azure also provides managed databases for MySQL, PostgreSQL and MariaDB.

It provides an API compatible with MongoDB, Cassandra, Gremlin (Graph) and Azure Table Storage. If you need to run multiple managed data models, including document data models, graphs, key-values, tables and column families in a single cloud, Cosmos may be the best option.

In addition to the pay-per-use credit card billing model and other billing modes, customers with existing corporate accounts can purchase pre-subscriptions to Azure as part of their annual renewals. This is useful for customers who want to budget annual cloud spending in advance. Avoiding uncertainty and additional mid-year budget approvals.

Mobility of cloud licenses for Microsoft products is also relatively easy for customers with multiple Microsoft products running on-premises.

Pros and cons of Google Cloud Platform

The Google Cloud Platform (GCP), despite being late in the game and with the lowest market share of public cloud providers, is showing growth in recent years.

Google Cloud Platform Services

It has several features that put you ahead of your competitors in certain areas. GCP is also catching on, not only with new customers who are already part of the ecosystem, but also the first cloud users who want to expand their scenario to Google as part of a multi-cloud strategy. Google also started with PaaS services, but has been constantly expanding its product portfolio.

When to choose GCP From a computing point of view, Google has the least number of VM sizes (28 instance types in 4 categories). However, it does have a feature that makes these numbers a little irrelevant.

Google allows users to create their own custom sizes (CPU, memory) so that customers can match the size of workloads in the cloud with the size on site. Billing is also based on the total CPU and memory used, rather than individual VMs. This reduces wasted unused capacity.

Another unique feature is that GCP allows almost all instance types to connect GPUs. This can turn any standard or custom instance into an ML-ready VM. Google was also a leader in billing per second, which forced other CSPs to follow suit. Compared to the usual hourly billing standard, billing per second greatly reduces any waste of capacity. This results in savings of up to 40% overall.

Google also linked or purchased third-party cloud migration tools. These tools, such as CloudEndure, Velostrata and CloudPhysics, help customers evaluate, plan and live migrate their VMs to GCP.

Network is the highlight of GCP. They have a low latency global network. Even from the customer's perspective, a VPC network spans all of its regions. Other CSPs limit VPC networks to one region. This makes it easier for GCP customers to create applications that serve customers globally, without creating complex infrastructure design mechanisms across regions and data replication.

For NoSQL Banks, GCP has a product called BigTable. BigTable is a NoSQL database managed on a petabyte scale, used by Google in its own products, such as Gmail.

From a billing point of view, Google offers automatic discounts, such as sustained usage discounts, which lower the price on demand if a VM runs more than a certain number of hours in a month. If you want the most economical cloud provider, GCP is a great option.

Conclusion Each provider has features and advantages that meet specific customer needs. While all cloud providers will continue to provide certain common services (such as a managed MySQL database), each CSP will create differentiated and exclusive services to address very specific customer needs.

From the customer's perspective, these services will also become a way to adopt a multi-cloud strategy. As an example, a customer may want to use GCP for an application that needs Spanner capabilities, while using AWS for their AI services and Azure for specific Windows workloads.

The trend is to get customers to combine resources and providers to arrive with a high availability and operational capacity solution.

[EN-US] Cybersecurity Statistics, Predictions, and Solutions for 2021

In 2020, Cybersecurity has become more important than ever for businesses all over the world. Following various statistics published across the media, we can clearly see that no one is immune against cyber-attacks: major players investing massively in their companies’ cybersecurity, small businesses, and individuals.

Covid-19 pandemic definitely left a huge impact on the overall cybersecurity situation. First, the global lockdown forced many companies to shift to remote work. Cybercriminals took advantage of vulnerable home networks. Many organizations encountered data breaches at the beginning of the work-from-home shift. For instance: 80% of companies reported an increase in cyberattacks in 2020.

Most of the malware was received from email (94% of cases) At the beginning of April 2020, Google reported it was blocking every day 18 million malware e-mails related to COVID-19. Between January and April 2020, the attacks on cloud services increased by 630% Apparently, the healthcare and financial industries were the most affected ones, as they deal with huge amounts of personal data. For instance, various researches show that: In 2020, 27% of all cyberattacks targeted healthcare and financial sectors.

From the beginning of February to the end of April 2020, attacks against the banks rose by 238% (when COVID-19 have started spreading). Most of the financial institutions (82%) reported that it is more and more difficult to fight against cybercriminals, as they become more and more sophisticated. Even though the businesses are trying to adapt to the growing threats coming from cybercriminals, cybersecurity specialists are not optimistic at all, as their researches show that cybercriminals are changing their way of acting even more, and are not planning to slow down. Here are just some figures and predictions for 2021, presented by

Cybersecurity Ventures: By 2021, Cybercrime is expected to cost the world $6.1 trillion annually (more than twice compared to 2015), making it the world’s third-largest economy, after the USA and China.

The cybersecurity experts predict a cyberattack incident to happen every 11 seconds in 2021 (4 times more than in 2016) In 2021, 1st place in the nomination “The Fastest Growing Kind Of Cyber-crime” will go to Ransomware. As the worldwide costs caused by such kind of damages will reach $20 billion (57 times more than in 2015). Taking into account all the mentioned statistics and predictions, it is obvious that organizations and individuals must rethink completely their cybersecurity approaches and strategies.

So what can we all do to resist the cybercrimes more effectively?

Empower your Employees…with Knowledge It has been proved that 90% of cyber-attacks are related to human errors. Often, people take cybersecurity for granted, and most of the employees are not even aware of cyber-attack types and risks…until it is too late. Any employee, who is not well-informed about cybersecurity, can unwillingly fall victim to cyber-attacks, placing your company and clients at risk. That’s why it is crucial to educate the employees, especially today when many of them are working from home. So start spreading cybersecurity awareness right now: provide your employees with all the necessary information concerning cyber threats and bad consequences caused by those; organize cybersecurity training sessions and phishing experiments. Stay in control of the process: make your employees use only secure software and strong passwords, explain to them why they should get the approval of the IT department before installing any software, and why they might have limited access to some data, in some cases.

Protect Proactively

Preventing any damage is always better than repairing it. Cyber-criminals will constantly search the weak points in your company’s cybersecurity infrastructure, that is why you always have to be ahead of them, detecting an attack before it happens. This way of thinking will help you to reduce the damage and avoid major problems. Take all the necessary precautions to ensure your data is protected.

Any Backup Plans?

Researches show that many companies didn’t think about any back-up plans and tactics in the case the attackers have succeeded to steal the data. Again, educate your employees: everyone should be aware of his own responsibilities in all the possible scenarios Constantly control and monitor the entire data stored and shared inside and outside your company’s network. Even though the attacks on cloud storage have increased drastically, never forget to back up your entire content. But how to make sure that the data stored on your computers and cloud services is really protected…even if it was stolen? The answer is simple. Make it useless for the thieves!

Apparently, as we have seen, following all the figures listed above, your data is ultra-protected not when it cannot be breached (because it always can), but when it cannot be read by unauthorized users. Today there are various new technologies that render data useless to unauthorized users and protect your data no matter where it is stored.

For example, Cybervore offers a patented breakthrough technology, which combines authentication, AES 265 encryption, and fragmentation. It is a cybersecurity software called Fragglestorm™ :

https://www.cybervore.com/fragglestorm

A secure method where data is encrypted, sliced, or split into a defined number of fragments that are replicated, and only the authorized user has access. This offers a way to significantly increase data protection and integrity, and ensure a user’s data privacy across any on-premise device and cloud storage service.

Meta-Lists

- atariarchives.org atariarchives.org makes books, information, and software for Atari and other classic computers available on the Web.

- Bento

- Bitsavers.org

- Bookboon: IT & Programming Cookie required. (email address requested, not required)

- conceptf1.blogspot.com

- Free Smalltalk Books, collected by Stéphane Ducasse

- Free Tech Books

- Goalkicker - Programming Notes for Professionals books

- IBM Redbooks

- InfoQ Minibooks

- InTech: Computer and Information Science

- JSBooks - directory of free javascript ebooks

- Learn X in Y minutes

- Learneroo Resources to Learn Programming

- Microsoft Guides to Software

- Microsoft Press: Free E-Books

- Microsoft Technologies 1, including books on Windows Azure, SharePoint, Visual Studio Guide, Windows phone development, ASP.net, Office365, etc. collection by Eric Ligman

- Microsoft Technologies 2, including books on Windows Azure, SharePoint, Visual Studio Guide, Windows phone development, ASP.net, etc. collection by Eric Ligman

- O'Reilly's Open Books Project

- Papers we love

- Programming - Wikibooks

- Red Gate Books

- Stef's Free Online Smalltalk Books

- TechBeamers.com

- TechBooksForFree.com

- The Definitive C++ Book Guide and List

- Theassayer.org

- tutorialspoint

Graphics Programming

- 3D Game Shaders For Beginners - David Lettier (Git) (HTML)

- Blender 3D: Noob to Pro - Wikibooks

- Blender Manual

- Computer Graphics from scratch - Gabriel Gambetta (:construction: in process)

- DirectX manual (draft)

- GPU Gems

- Graphics Programming Black Book - Michael Abrash

- Introduction to Modern OpenGL - Alexander Overvoorde (HTML, EPUB, PDF) (C++)

- Introduction to TouchDesigner 099 (Leanpub account or valid email requested)

- Learn Computer Graphics From Scratch! - Scratchapixel (:construction: in process)

- Learn OpenGL - Joey de Vries

- Learn OpenGL RS - Benjamin Wasty et al. (:construction: in process) (Rust)

- Learning Modern 3D Graphics Programming - Jason L. McKesson (draft)

- Notes for a Computer Graphics Programming Course - Dr. Steve Cunningham (PDF)

- OpenGL - Concepts and illustrations.

- ShaderX series - Wolfgang Engel

- Tutorials for modern OpenGL

- WebGL Insights - Patrick Cozzi and Contributors

Vulkan

- Vulkan Tutorial - Alexander Overvoorde (EPUB, HTML, PDF) (C++)

- Vulkan Tutorial Java - Cristian Herrera et al (Java)

- Vulkan Tutorial RS - Benjamin Wasty et al. (:construction: in process) (Rust)

- Vulkano - Tomaka et al. (HTML) (Rust)

Graphical User Interfaces

- Programming with gtkmm 4

- Search User Interfaces - Marti A. Hearst

- The GLib/GTK+ Development Platform - Sébastien Wilmet (PDF)

GraphQL

Language Agnostic

Algorithms & Data Structures

- A Field Guide To Genetic Programming - Riccardo Poli et al. (PDF)

- Algorithm Design - Jon Kleinberg and Éva Tardos (PDF)

- Algorithmic Graph Theory

- Algorithms - Wikibooks

- Algorithms, 4th Edition - Robert Sedgewick and Kevin Wayne

- Algorithms and Automatic Computing Machines (1963) - B. A. Trakhtenbrot

- Algorithms and Complexity - Herbert S. Wilf (PDF)

- Algorithms Course Materials - Jeff Erickson

- Algorithms Notes for Professionals - Compiled from StackOverflow Documentation (PDF)

- Analysis and Design of Algorithms - Sandeep Sen, IIT Delhi

- Animated Algorithm and Data Structure Visualization (Resource)

- Annotated Algorithms in Python: Applications in Physics, Biology, and Finance - Massimo di Pierro

- Binary Trees (PDF)

- Data Structures - Prof. Subhashis Banerjee, IIT Delhi

- Data Structures (Into Java) - Paul N. Hilfinger (PDF)

- Data Structures and Algorithms: Annotated Reference with Examples - G. Barnett and L. Del Tongo

- Data Structures Succinctly Part 1, Syncfusion (PDF, Kindle) (email address requested, not required)

- Data Structures Succinctly Part 2, Syncfusion (PDF, Kindle) (email address requested, not required)

- Elementary Algorithms - Larry LIU Xinyu

- Foundations of Computer Science - Al Aho and Jeff Ullman

- Geometry Algorithms - Dan Sunday

- Lectures Notes on Algorithm Analysis and Computational Complexity (Fourth Edition) - Ian Parberry (use form at bottom of license)

- LEDA: A Platform for Combinatorial and Geometric Computing - K. Mehlhorn et al.

- Linked List Basics (PDF)

- Linked List Problems (PDF)

- Matters Computational: Ideas, Algorithms, Source Code (PDF)

- Open Data Structures: An Introduction - Pat Morin

- Planning Algorithms

- Problems on Algorithms (Second Edition) - Ian Parberry (use form at bottom of license)

- Purely Functional Data Structures (1996) - Chris Okasaki (PDF)

- Sequential and parallel sorting algorithms

- Text Algorithms (PDF)

- The Algorithm Design Manual

- The Art of Computer Programming - Donald Knuth (fascicles, mostly volume 4)

- The Design of Approximation Algorithms (PDF)

- The Great Tree List Recursion Problem (PDF)

- The Kademlia Protocol Succinctly - Marc Clifton

- Think Complexity - Allen B. Downey (2nd Edition) (PDF, HTML)

Artificial Intelligence

- Introduction to Autonomous Robots - Nikolaus Correll (PDF)

- The Quest for Artificial Intelligence: A History of Ideas and Achievements - Nils J. Nilsson (PDF)

Cellular Automata

- A New Kind of Science - Stephen Wolfram

Cloud Computing

- Azure for Architects, Third Edition (PDF) (email address or account required)

- Azure Functions Succinctly, Syncfusion (PDF, Kindle) (email address requested, not required)

- Cloud Computing for Science and Engineering - Ian Foster, Dennis B. Gannon (:construction: in process)

- Cloud Design Patterns

- Designing Distributed Systems (account required)

- Learn Azure in a Month of Lunches - Iain Foulds (PDF)

- Monitoring Modern Infrastructure (account required)

- Multi-tenant Applications for the Cloud, 3rd Edition

- OpenStack Operations Guide

- Streamline microservice management with Istio Service Mesh (account required)

- The Developer’s Guide to Azure

Competitive Programming

- Competitive Programmer's Handbook - Antti Laaksonen (PDF)

- Competitive Programming, 1st Edition - Steven Halim (PDF)

- Competitive Programming, 2nd Edition - Steven Halim (PDF)

- Principles of Algorithmic Problem Solving - Johan Sannemo (PDF)

Compiler Design

- An Introduction to GCC - Brian Gough

- Basics of Compiler Design (Anniversary Edition) - Torben Mogensen

- Compiler Design in C (1990) - Allen Holub, Prentice Hall

- Compiler Design: Theory, Tools, and Examples, C/C++ Edition - Seth D. Bergmann

- Compiler Design: Theory, Tools, and Examples, Java Edition - Seth D. Bergmann

- Compiling Scala for the Java Virtual Machine - Michel Schinz (PDF)

- Compiling Techniques (1969) - F.R.A. Hopgood, Macdonald

- Crafting Interpreters (:construction:) - Bob Nystrom (HTML)

- Implementing Functional Languages: A Tutorial - Simon Peyton Jones, David Lester

- Let's Build a Compiler (PDF)

- Practical and Theoretical Aspects of Compiler Construction (class lectures and slides)

- The ANTLR Mega Tutorial

Computer Science

- Computational Thinking - Jeannette Wing, Carnegie-Mellon University (PDF)

- Computer Science I - Draft - Dr. Chris Bourke (PDF) (:construction: in process)

- Computer Science II - Draft - Dr. Chris Bourke (PDF) (:construction: in process)

- CS Principles: Big Ideas in Programming - Mark Guzdial and Barbara Ericson (HTML)

Computer Vision

- Computer Vision - Dana Ballard, Chris Brown

- Computer Vision: Algorithms and Applications - Richard Szeliski

- Computer Vision: Models, Learning, and Inference - Simon J.D. Prince

- Programming Computer Vision with Python - Jan Erik Solem

Containers

- Docker Jumpstart - Andrew Odewahn

Database

- Database Design, 2nd Ed. - Adrienne Watt, Nelson Eng (HTML, PDF, EPUB, Kindle)

- Database Explorations (PDF)

- Database Fundamentals (PDF)

- Databases, Types, and The Relational Model: The Third Manifesto (PDF)

- Foundations of Databases

- Readings in Database Systems, 5th Ed.

- Temporal Database Management - Christian S. Jensen

- The Theory of Relational Databases

Datamining

- A Programmer's Guide to Data Mining - Ron Zacharski (Draft)

- Data Jujitsu: The Art of Turning Data into Product (email address requested, not required)

- Data Mining Algorithms In R - Wikibooks

- Internet Advertising: An Interplay among Advertisers, Online Publishers, Ad Exchanges and Web Users (PDF)

- Introduction to Data Science - Jeffrey Stanton

- Introduction to Data Science - Rafael A Irizarry (Leanpub account or valid email requested)

- Mining of Massive Datasets

- School of Data Handbook

- Statistical inference for data science - Brian Caffo

- The Ultimate Guide to 12 Dimensionality Reduction Techniques (with Python codes) - Pulkit Sharma

- Theory and Applications for Advanced Text Mining

Information Retrieval

Licensing

- Creative Commons: a user guide - Simone Aliprandi

- Open Source Licensing Software Freedom and Intellectual Property Law - Lawrence Rosen

- The Public Domain: Enclosing the Commons of the Mind - James Boyle

Machine Learning

- A Brief Introduction to Machine Learning for Engineers - Osvaldo Simeone (PDF)

- A Brief Introduction to Neural Networks

- A Comprehensive Guide to Machine Learning - Soroush Nasiriany, Garrett Thomas, William Wang, Alex Yang (PDF)

- A Course in Machine Learning (PDF)

- A First Encounter with Machine Learning (PDF)

- A Selective Overview of Deep Learning - Fan, Ma, and Zhong (PDF)

- Algorithms for Reinforcement Learning - Csaba Szepesvári (PDF)

- An Introduction to Statistical Learning - Gareth James, Daniela Witten, Trevor Hastie and Robert Tibshirani

- Bayesian Reasoning and Machine Learning

- Deep Learning - Ian Goodfellow, Yoshua Bengio and Aaron Courville

- Deep Learning for Coders with Fastai and PyTorch - Jeremy Howard, Sylvain Gugger (Jupyter Notebooks)

- Deep Learning with PyTorch - Eli Stevens, Luca Antiga, Thomas Viehmann (PDF)

- Dive into Deep Learning

- Explorations in Parallel Distributed Processing: A Handbook of Models, Programs, and Exercises - James L. McClelland

- Foundations of Machine Learning, Second Edition - Mehryar Mohri, Afshin Rostamizadeh, Ameet Talwalkar

- Free and Open Machine Learning - Maikel Mardjan

- Gaussian Processes for Machine Learning

- IBM Machine Learning for Dummies - Judith Hurwitz, Daniel Kirsch

- Information Theory, Inference, and Learning Algorithms

- Interpretable Machine Learning - Christoph Molnar

- Introduction to CNTK Succinctly - James McCaffrey

- Introduction to Machine Learning - Amnon Shashua

- Keras Succinctly - James McCaffrey

- Learn Tensorflow - Jupyter Notebooks

- Learning Deep Architectures for AI (PDF)

- Machine Learning

- Machine Learning for Data Streams - Albert Bifet, Ricard Gavaldà, Geoff Holmes, Bernhard Pfahringer

- Machine Learning from Scratch - Danny Friedman

- Machine Learning, Neural and Statistical Classification

- Mathematics for Machine Learning - Garrett Thomas (PDF)

- Mathematics for Machine Learning - Marc Peter Deisenroth, A Aldo Faisal, and Cheng Soon Ong

- Neural Networks and Deep Learning

- Probabilistic Models in the Study of Language (Draft, with R code)

- Python Machine Learning Projects - Lisa Tagliaferri and Brian Boucheron (PDF, EPUB, MOBI)

- Reinforcement Learning: An Introduction - Richard S. Sutton, Andrew G. Barto (PDF)

- Speech and Language Processing (3rd Edition Draft) - Daniel Jurafsky, James H. Martin (PDF)

- The Elements of Statistical Learning - Trevor Hastie, Robert Tibshirani, and Jerome Friedman

- The LION Way: Machine Learning plus Intelligent Optimization - Roberto Battiti, Mauro Brunato (PDF)

- The Python Game Book

- Top 10 Machine Learning Algorithms Every Engineer Should Know - Binny Mathews and Omair Aasim

- Understanding Machine Learning: From Theory to Algorithms - Shai Shalev-Shwartz, Shai Ben-David

Mathematics

- A Computational Introduction to Number Theory and Algebra - Victor Shoup

- A First Course in Complex Analysis - Matthias Beck, Gerald Marchesi, Dennis Pixton, Lucas Sabalka

- A First Course in Linear Algebra - Rob Beezer

- A Friendly Introduction to Mathematical Logic - Christopher C. Leary and Lars Kristiansen

- A Gentle Introduction to the Art of Mathematics - Joseph E. Fields

- Abstract Algebra: Theory and Applications - Tom Judson

- Active Calculus - Matt Boelkins

- Advanced Algebra - Anthony W. Knapp (PDF)

- Algebra: Abstract and Concrete - Frederick Goodman

- Algebra: An Elementary Text-Book, Part I (1904) - G. Chrystal (PDF)

- Algebra: An Elementary Text-Book, Part II (1900) - G. Chrystal (PDF)

- Algebraic Topology - Allen Hatcher (PDF)

- An Introduction to the Theory of Numbers - Leo Moser (PDF)

- Analytic Geometry (1922) - Lewis Parker Siceloff, George Wentworth, David Eugene Smith (PDF)

- APEX Calculus - Gregory Hartman, Brian Heinold, Troy Siemers, and Dimplekumar Chalishajar

- Applied Combinatorics - Mitchel T. Keller and William T. Trotter

- Applied Discrete Structures - Alan Doerr and Kenneth Levasseur

- Basic Algebra - Anthony W. Knapp (PDF)

- Basic Analysis: Introduction to Real Analysis - Jiří Lebl

- Basics of Algebra, Topology, and Differential Calculus (PDF)

- Bayesian Methods for Hackers - Cameron Davidson-Pilon

- Beginning and Intermediate Algebra - Tyler Wallace

- Book of Proof - Richard Hammack (PDF)

- Calculus - Gilbert Strang

- Calculus I - Jerrold E. Marsden and Alan Weinstein

- Calculus in Context - James Callahan

- Calculus Made Easy - Silvanus P. Thompson (PDF)

- Category Theory for the Sciences - David I. Spivak

- CK-12 Probability and Statistics - Advanced

- CLP-1 Differential Calculus - Joel Feldman, Andrew Rechnitzer, and Elyse Yeager

- CLP-2 Integral Calculus - Joel Feldman, Andrew Rechnitzer, and Elyse Yeager

- CLP-3 Multivariable Calculus - Joel Feldman, Andrew Rechnitzer, and Elyse Yeager

- CLP-4 Vector Calculus - Joel Feldman, Andrew Rechnitzer, and Elyse Yeager

- Collaborative Statistics

- Combinatorics Through Guided Discovery - Kenneth Bogart

- Complex Analysis - George Cain

- Computational and Inferential Thinking. The Foundations of Data Science

- Computational Geometry

- Computational Mathematics with SageMath - Paul Zimmermann et al.

- Concepts & Applications of Inferential Statistics

- Convex Optimization - Stephen Boyd and Lieven Vandenberghe

- Coordinate Geometry (1911) - Henry Buchard Fine and Henry Dallas Thompson (PDF)

- Differential Equations - Paul Dawkins (PDF, use download menu to download)

- Differential Equations (1922) - H. B. Phillips (PDF)

- Discrete Mathematics: An Open Introduction - Oscar Levin

- Discrete Mathematics: First and Second Course - Edward A. Bender and S. Gill Williamson

- Elementary Differential Equations - William F. Trench (PDF)

- Elementary Differential Equations (with Boundary Value Problems) - William F. Trench

- Elementary Number Theory: Primes, Congruences, and Secrets - William Stein

- Elementary Real Analysis - Brian S. Thomson, Judith B. Bruckner, Andrew M. Bruckner

- Elements of Abstract and Linear Algebra - E. H. Connell

- Elements of Differential and Integral Calculus (1911) - William Anthony Granville (PDF)

- Essentials of Metaheuristics - Sean Luke

- First Course in Algebra (1910) - Herbert E. Hawkes, William A. Luby, Frank C. Touton (PDF)

- Foundations of Combinatorics with Applications - Edward A. Bender and S. Gill Williamson

- Foundations of Constructive Probability Theory - Yuen-Kwok Chan (PDF)

- Geometry with an Introduction to Cosmic Topology - Michael P. Hitchman

- Graph Theory

- How We Got from There to Here: A Story of Real Analysis - Robert Rogers and Eugene Boman

- Introduction to Probability - Charles M. Grinstead and J. Laurie Snell

- Introduction to Probability and Statistics Spring 2014

- Introduction to Proofs - Jim Hefferon

- Introduction to Real Analysis - William F. Trench

- Introduction to Statistical Thought - Michael Lavine

- Introductory Statistics for the Life and Biomedical Sciences - Julie Vu and David Harrington

- Kalman and Bayesian Filters in Python

- Knapsack Problems - Algorithms and Computer Implementations - Silvano Martello and Paolo Toth

- Lecture Notes of Linear Algebra - Dr. P. Shunmugaraj, IIT Kanpur (PDF)

- Lies, Damned Lies, or Statistics: How to Tell the Truth with Statistics - Jonathan A. Poritz (PDF)

- Linear Algebra - Dr. Arbind K Lal, Sukant Pati (PDF) (:construction: in process)

- Linear Algebra (PDF)

- Linear Algebra - Jim Hefferon

- Linear Algebra Done Wrong - Sergei Treil

- Linear Algebra, Infinite Dimensions, and Maple - James Herod

- Linear Methods of Applied Mathematics - Evans M. Harrell II and James V. Herod

- Magic Squares and Cubes (1917) - W. S. Anderson (PDF)

- Math in Society - David Lippman

- Mathematical Analysis I - Elias Zakon

- Mathematical Discovery - Andrew M. Bruckner, Brian S. Thomson, and Judith B. Bruckner

- Mathematical Logic - an Introduction (PDF)

- Mathematical Reasoning: Writing and Proof - Ted Sundstrom

- Mathematics, MTH101A - P. Shunmugaraj, IIT Kanpur

- Modern Statistics for Modern Biology - Susan Holmes and Wolfgang Huber

- Multivariable Calculus - George Cain and James Herod

- Non-Uniform Random Variate Generation - Luc Devroye (PDF)

- Notes on Diffy Qs - Jiří Lebl

- Number Theory - Holden Lee MIT

- Number Theory: In Context and Interactive - Karl-Dieter Crisman

- Odds and Ends: Introducing Probability & Decision with a Visual Emphasis - Jonathan Weisberg

- Online Statistics Education - David Lane

- OpenIntro Statistics - David M. Diez, Christopher D. Barr, Mine Çetinkaya-Rundel

- ORCCA: Open Resources for Community College Algebra - Portland Community College

- Ordinary Differential Equations - Wikibooks

- Plane Geometry (1913) - George Wentworth and David Eugene Smith (PDF)

- Planes and Spherical Trigonometry (1915) - George Wentworth and David Eugene Smith (PDF)

- Precalculus - Carl Stitz and Jeff Zeager (PDF)

- Probability and Statistics Cookbook

- Probability and Statistics EBook

- Probability: Lectures and Labs - Mark Huber

- Recreations in Math - H. E. Licks (PDF)

- Sage for Undergraduates - Gregory Bard

- Second Course in Algebra - Herbert E. Hawkes, William A. Luby, Frank C. Touton (PDF)

- Seven Sketches in Compositionality: An Invitation to Applied Category Theory - Brendan Fong and David I. Spivak (PDF)

- Statistical Thinking for the 21st Century - Russell A. Poldrack

- Statistics Done Wrong - Alex Reinhart

- SticiGui - Philip Stark

- Tea Time Numerical Analysis - Leon Q. Brin

- The Joy of Cryptography (2020) - Mike Rosulek (PDF) (:construction: in process)

- Think Bayes: Bayesian Statistics Made Simple - Allen B. Downey

- Think Stats: Probability and Statistics for Programmers - Allen B. Downey (using Python)

- Vector Calculus - Michael Corral

- Yet Another Introductory Number Theory Textbook - Jonathan A. Poritz (PDF)

Mathematics For Computer Science

- A Mathematical Theory of Communication - Claude E.Shannon

- Discrete Structures for Computer Science: Counting, Recursion, and Probability - Michiel Smid

Misc

- 10 Keys to Great Landing Pages - iThemes Media (PDF)

- 2016 European Software Development Salary Survey

- 2016 Software Development Salary Survey

- 2D Game Development: From Zero To Hero (:construction: in process)

- 97 Things Every Programmer Should Know - Extended (Leanpub account or valid email requested)

- A MACHINE MADE THIS BOOK ten sketches of computer science - JOHN WHITINGTON (PDF)

- Ansible Up & Running (first three chapters) (account required)

- Asterisk™: The Definitive Guide

- Barcode Overview (PDF)

- Bitcoin and Cryptocurrency Technologies - Arvind Narayanan, Joseph Bonneau, Edward Felten, Andrew Miller, Steven Goldfeder (PDF)

- Coding With Minecraft - Al Sweigart

- Come, Let's Play: Scenario-Based Programming Using Live Sequence Charts

- Communicating Sequential Processes - Tony Hoare (PDF)

- Confessions of an Unintentional CTO: Lessons in Growing a Web App - Jack Kinsella

- Culture & Empire: Digital Revolution (PDF)

- Design With FontForge

- Designing Interfaces - Jennifer Tidwell

- DevDocs - Documents for Developers in 1 place

- DevOps For Dummies (IBM Edition) (PDF)

- Digital Signal Processing For Communications

- Digital Signal Processing For Engineers and Scientists

- Digital Signal Processing in Python - Allen B. Downey

- "DYNAMIC LINKED LIBRARIES": Paradigms of the GPL license in contemporary software - Luis A. Enríquez

- Essential Image Optimization - Addy Osmani

- Foundations of Programming (PDF)

- Game AI Pro - Steve Rabin

- Game Programming Patterns - Bob Nystrom

- Getting Real

- Google Maps API Succinctly - Mark Lewin

- Hacknot: Essays on Software Development - Ed Johnson

- Hello SDL - Lazy Foo' Productions

- High-Performance Scientific Computing (class lectures and slides)

- HoloLens Succinctly - Lars Klint

- How Computers Work - R. Young

- How to Become a Programmer (PDF)

- How to Think Like a Computer Scientist - Peter Wentworth, Jeffrey Elkner, Allen B. Downey, and Chris Meyers

- Image Processing in C: Analyzing and Enhancing Digital Images - Dwayne Phillips

- Information Technology and the Networked Economy (PDF)

- Introduction to Scientific Programming in C++ and Fortran - Victor Eijkhout (PDF)

- IRPF90 Fortran code generator - Anthony Scemama

- Learn Programming - Antti Salonen

- Learn to Program

- Learning 30 Technologies in 30 Days: A Developer Challenge - Shekhar Gulati

- Linked Data Patterns: A pattern catalogue for modelling, publishing, and consuming Linked Data - Leigh Dodds, Ian Davis

- Magic Ink: Information Software and The Graphical Interface - Bret Victor

- Mastering Bitcoin - Unlocking digital currencies - Andreas M. Antonopoulos

- Mobile Developer's Guide to the Galaxy (Leanpub account or valid email requested)

- Modeling Reactive Systems with Statecharts

- MSIX Succinctly - Matteo Pagani

- Networks, Crowds, and Markets: Reasoning About a Highly Connected World

- Object-Oriented Reengineering Patterns - Serge Demeyer, Stéphane Ducasse and Oscar Nierstrasz

- Open Government; Collaboration, Transparency, and Participation in Practice - Daniel Lathrop, Laurel Ruma

- PDQ: Pretty Darn Quick: An Agile, All-Purpose Methodology - Jeff Franz-Lien (Leanpub account or valid email requested)

- Philosophy of Computer Science (PDF)

- Playtime with Hyperledger Composer - Shubham Chadokar (PDF)

- PNG: The Definitive Guide

- Pointers And Memory (PDF)

- Principles of Computer System Design (PDF)

- Procedural Content Generation in Games - Noor Shaker, Julian Togelius, Mark Nelson

- Programming with Unicode

- Real-World Maintainable Software - Abraham Marin-Perez

- Record-Playback Test Automation: Sahi & Selenium IDE: Critical Evaluation of Record-Playback Automation Tools - Shashikant Jagtap (Leanpub account or valid email requested)

- Scientific Programming and Computer Architecture - Divakar Viswanath

- Signal Computing: Digital Signals in the Software Domain - Michael Stiber, Bilin Zhang Stiber, Eric C. Larson (PDF)

- Small Memory Software

- Web Almanac (PDF)

- Writing Native Mobile Apps in a Functional Language Succinctly - Vassili Kaplan

MOOC

Networking

- An Introduction to Computer Networks (HTML, PDF, Kindle)

- Bits, Signals, and Packets: An Introduction to Digital Communications and Networks

- Code Connected vol.1 (PDF) (book on ZeroMQ)

- Computer Networking : Principles, Protocols and Practice (HTML, ePub, PDF, Kindle)

- Computer Networks: A Systems Approach - Larry Peterson and Bruce Davie (HTML, epub, mobi, PDF)

- Distributed systems for fun and profit

- High-Performance Browser Networking - Ilya Grigorik

- How HTTPS Works - DNSimple

- HTTP Succinctly, Syncfusion (PDF, Kindle) (email address requested, not required)

- HTTP2 Explained - Daniel Stenberg

- Introduction to HTTP - Launch School

- IPv6 for IPv4 Experts - Yar Tikhiy (PDF)

- Kafka, The definitive Guide - Neha Narkhede (PDF)

- Network Science - Albert-Laszló Barabási

- The TCP/IP Guide

- Understanding IP Addressing: Everything you ever wanted to know (PDF)

- ZeroMQ Guide

Open Source Ecosystem

- Data Journalism Handbook

- Free as in Freedom: Richard Stallman and the free software revolution - Sam Williams (PDF)

- Free for All - Peter Wayner

- Free Software, Free Society: Selected Essays of Richard M. Stallman

- Getting Started with InnerSource (email address requested, not required)

- Getting started with Open source development (PDF)

- GitLab Handbook

- How to get started with open source (ePub & ODT)

- Innovation Happens Elsewhere - Ron Goldman, Richard P. Gabriel

- Introduction to Networking - Charles Severance (PDF)

- Open Advice: FOSS: What We Wish We Had Known When We Started

- Open source in Brazil - Andy Oram

- Producing Open Source Software - Karl Fogel

- The Architecture of Open Source Applications: Vol. 1: Elegance, Evolution, and a Few Fearless Hacks; Vol. 2: Structure, Scale, and a Few More Feerless Hacks

- The Art of Community - Jono Bacon (PDF)

- The Cathedral and the Bazaar - Eric S. Raymond

- The Future of the Internet - Jonathan Zittrain

- The Open Source Way

- The Wealth of Networks: How Social Production Transforms Markets and Freedom - Yochai Benkler

Operating Systems

- A short introduction to operating systems (2001) - Mark Burgess (PDF)

- Computer Science from the Bottom Up

- How to Make a Computer Operating System (:construction: in process)

- How to write a simple operating system in assembly language - Mike Saunders (HTML)

- Operating Systems and Middleware (PDF and LaTeX)

- Operating Systems: Three Easy Pieces (PDF)

- Practical File System Design: The Be File System - Dominic Giampaolo (PDF)

- Project Oberon: The Design of an Operating System, a Compiler, and a Computer - Niklaus Wirth & Jürg Gutknecht (PDF)

- The Art of Unix Programming - Eric S. Raymond

- The Design and Implementation of the Anykernel and Rump Kernels - Antti Kantee

- The little book about OS development - Erik Helin, Adam Renberg

- The Little Book of Semaphores - Allen B. Downey

- Think OS: A Brief Introduction to Operating Systems - Allen B. Downey (PDF)

- UNIX Application and System Programming, lecture notes - Prof. Stewart Weiss (PDF)

- Writing a Simple Operating System from Scratch - Nick Blundell (PDF)

- Xv6, a simple Unix-like teaching operating system

Parallel Programming

- High Performance Computing - Charles Severance & Kevin Dowd (PDF, ePUB)

- High Performance Computing Training (LLNL materials)

- High-Performance Scientific Computing (class lectures and slides)

- Introduction to High-Performance Scientific Computing - Victor Eijkhout

- Introduction to Parallel Computing - Blaise Barney

- Is Parallel Programming Hard, And, If So, What Can You Do About It? - Paul E. McKenney

- Programming on Parallel Machines; GPU, Multicore, Clusters and More - Norm Matloff Kerridge (PDF) (email address requested, not required)

- The OpenCL Programming Book

- Using Concurrency and Parallelism Effectively – I - Jon Kerridge (PDF) (email address requested, not required)

- Using Concurrency and Parallelism Effectively – II - Jon

Partial Evaluation

- Partial Evaluation and Automatic Program Generation - (PDF) Jones, Gomard and Sestoft

Professional Development

- Clean Code Developer: An initiative for more professionalism in software development (:construction: in process)

- Confessions of an IT Manager - Phil Factor (PDF)

- Don't Just Roll the Dice - Neil Davidson (PDF)

- How to Do What You Love & Earn What You’re Worth as a Programmer - Reginald Braithwaite

- Professional Software Development For Students - Mike G. Miller

- What I've Learned From Failure - Reginald Braithwaite

Programming Paradigms

- Flow based Programming - J Paul Morrison

- Introduction to Functional Programming - J. Harrison

- Making Sense of Stream Processing - Martin Kleppmann (PDF)

- Mostly Adequate Guide to Functional Programming - Mostly Adequate Core Team

- The Pure Function Pipeline Data Flow v3.0 ---- the Grand Unified Programming Theory - Lin Pengcheng

- Type Theory and Functional Programming

Regular Expressions

- JavaScript RegExp - Sundeep Agarwal

- Python re(gex)? - Sundeep Agarwal

- Regular Expressions for Regular Folk - Shreyas Minocha

- RexEgg

- Ruby Regexp - Sundeep Agarwal

- The 30 Minute Regex Tutorial - Jim Hollenhorst

- The Bastards Book of Regular Expressions: Finding Patterns in Everyday Text - Dan Nguyen (Leanpub account or valid email requested)

Reverse Engineering

- BIOS Disassembly Ninjutsu Uncovered 1st Edition - Darmawan Salihun (PDF)

- Hacking the Xbox: An Introduction to Reverse Engineering - Andrew "bunnie" Huang

- iOS App Reverse Engineering (PDF)

- Reverse Engineering for Beginners - Dennis Yurichev (PDF)

Security

- A Graduate Course in Applied Cryptography

- Crypto 101 - Crypto for everyone

- CryptoParty Handbook

- Handbook of Applied Cryptography

- How HTTPS works - dnsimple

- How to deal with Passwords

- Intrusion Detection Systems with Snort (PDF)

- OpenSSL Cookbook

- OWASP Mobile Security Testing Guide - Bernhard Mueller et al.

- OWASP Testing Guide 4.0 (PDF)

- OWASP Top 10 for .NET Developers

- Security Engineering

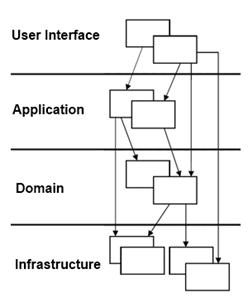

Software Architecture

- Agile Planning: From Ideas to Story Cards - Launch School

- Architectural Styles and the Design of Network-based Software Architectures - Roy Thomas Fielding

- Best Kept Secrets of Peer Code Review

- Building Secure & Reliable Systems - Heather Adkins, Betsy Beyer, Paul Blankinship, Piotr Lewandowski, Ana Oprea & Adam Stubblefield (PDF)

- Data-Oriented Design

- Developing Reactive Microservices (email address requested, not required)

- Domain Driven Design Quickly

- DSL Engineering: Designing, Implementing and Using Domain-Specific Languages - Markus Voelter

- Exploring CQRS and Event Sourcing - (EPUB, PDF)

- Guide to the Software Engineering Body of Knowledge (email adddress requested)

- How to Design Programs

- How to Write Unmaintainable Code

- Kanban and Scrum - making the most of both

- Kanban for skeptics - Nick Oostvogels (Leanpub account or valid email requested)

- Microservices AntiPatterns and Pitfalls (PDF)

- Microservices vs. Service-Oriented Architecture (PDF)

- Migrating to Cloud-Native Application Architectures (email address requested) (PDF)

- Naked objects - Richard Pawson (PDF)

- OAuth - The Big Picture (email address requested)

- Object-Oriented Reengineering Patterns - S. Demeyer, S. Ducasse and O. Nierstrasz

- Practicing Domain-Driven Design - Part 1 - Scott Millett (Leanpub account or valid email requested)

- Reactive Microservices Architecture (email address requested)

- Reactive Microsystems: The Evolution of Microservices at Scale (email address requested)

- Scrum and XP from the Trenches

- Serverless apps: Architecture, patterns, and Azure implementation

- Serverless Design Patterns and Best Practices - Brian Zambrano (Packt account required)

- Shape Up - Stop Running in Circles and Ship Work that Matters - Ryan Singer (PDF)

- Site Reliability Engineering

- Software Architecture Patterns (email address requested, not required)

- Software Engineering for Internet Applications

- Source Making Design Patterns and UML

- Test Driven Development, Extensive Tutorial - Grzegorz Gałęzowski

- The Site Reliability Workbook - Betsy Beyer, Niall Richard Murphy, David K. Rensin, Kent Kawahara and Stephen Thorne

- Web API Design - Brian Mulloy (PDF)

- Working with Web APIs - Launch School

- Your API Is Bad - Paddy Foran

Standards

Theoretical Computer Science

- Building Blocks for Theoretical Computer Science - Margaret M. Fleck

- Category Theory for Computing Science (PDF)

- Category Theory for Programmers - Bartosz Milewski (PDF)

- Design of a Programmer - Prakash Hegade (PDF)

- Homotopy Type Theory: Univalent Foundations of Mathematics (PDF)

- Introduction to Computer Science - Prof. Subhashis Banerjee, IIT Delhi

- Introduction to Computing

- Introduction to Theory of Computation - Anil Maheshwari and Michiel Smid (PDF)

- Models of Computation - John E. Savage

- Practical Foundations for Programming Languages, Preview - Robert Harper (PDF)

- Principles of Programming Languages - Scott F. Smith

- Programming and Programming Languages - Shriram Krishnamurthi

- Programming in Martin-Löf's Type Theory - Bengt Nordstroem

- Programming Languages: Application and Interpretation (2nd Edition) - Shriram Krishnamurthi

- Programming Languages: Theory and Practice - Robert Harper (PDF)

- Semantics with Applications: A Formal Introduction - Hanne Riis Nielson, Flemming Nielson (PDF)

- Structure and Interpretation of Computer Programs

- Structure and Interpretation of Computer Programs

- The Black Art of Programming - Mark McIlroy

- The Craft of Programming - John C. Reynolds

- Think Complexity - - Allen B. Downey (2nd Edition) (PDF, HTML)

Web Performance

- Book of Speed - Stoyan Stefanov

- Designing for Performance - Lara Hogan

- High Performance Accelerated Websites - Anshul (HTML) (:construction: in process)

- High Performance Browser Networking - Ilya Grigorik

- Mature Optimization - Carlos Bueno (PDF)

ABAP

Ada

- A Guide to Ada for C and C++ Programmers (PDF)

- Ada Distilled (PDF)

- Ada for the C++ or Java Developer - Quentin Ochem (PDF)

- Ada Programming - Wikibooks

- Ada Reference Manual - ISO/IEC 8652:2012(E) Language and Standard Libraries (PDF)

- Introduction To Ada

- Introduction To SPARK

- The Big Online Book of Linux Ada Programming

Agda

- Agda Tutorial

- Programming Language Foundations in Agda - Philip Wadler and Wen Kokke

Alef

Android

- Android 4 App Development Essentials

- Android Notes for Professionals - Compiled from StackOverflow Documentation (PDF)

- Android Programming Succinctly, Syncfusion (PDF, Kindle) (email address requested, not required)

- Android Tutorial - Tutorials Point

- Codelabs for Advanced Android Development

- CodePath Android Cliffnotes

- Coreservlets Android Programming Tutorial

- Expert Android and Eclipse development knowledge

- Google Android Developer Training

- Styling Android

- The Busy Coder's Guide to Android Development (PDF - older versions)

APL

- A Practical Introduction to APL1 & APL2 - Graeme Donald Robertson (PDF)

- A Practical Introduction to APL3 & APL4 - Graeme Donald Robertson (PDF)

- Mastering Dyalog APL (PDF)

Arduino

- Arduino Programming Notebook - Brian Evans

- Arduino Tips, Tricks, and Techniques - lady ada (PDF)

- Getting started with Arduino – A Beginner’s Guide - Brad Kendall (PDF)

- Getting Started with Arduino products - Official Arduino Documentation (:construction: in process)

- Introduction to Arduino

- Introduction to Arduino : A piece of cake! - Alan G. Smith

- Open softwear - Fashionable prototyping and wearable computing using the Arduino - Tony Olsson, David Gaetano, Jonas Odhner, Samson Wiklund (PDF)

ASP.NET

- Architecting Modern Web Applications with ASP.NET Core and Microsoft Azure (2020) - Steve "ardalis" Smith (PDF) (:construction: in process)

- ASP.NET Core 2 Succinctly - Simone Chiaretta, Ugo Lattanzi

- ASP.NET MVC Music Store

- ASP.NET WebHooks Succinctly - Gaurav Arora

- ASP.NET with C# (2008) - Vijay Mukhi, Sonal Mukhi, Neha Kotecha

- Diving into ASP.NET WebAPI (2016) - Akhil Mittal (PDF)

- Intro to ASPNET MVC 4 with Visual Studio 2011 Beta (2012) - Rick Anderson and Scott Hanselman (PDF)

- Introducing ASP.NET Web Pages 2 (2012) - Mike Pope (PDF)

- The Little ASP.NET Core Book (2018) - Nate Barbettini (PDF)

Assembly Language

- ARM Assembly Language Programming - Peter Knaggs (PDF) (:construction: in process)

- Assemblers And Loaders (1993) - David Salomon (PDF)

- Assembly Language Succinctly (PDF)

- PC Assembly Language - P. A. Carter

- Professional Assembly Language (PDF)

- Programming from the Ground Up - Jonathan Bartlett (PDF)

- Ralf Brown's Interrupt List

- Software optimization resources - A. Fog

- The Art of Assembly Language (2003) - Randall Hyde (PDF)

- Wizard Code, A View on Low-Level Programming - Tuomo Tuomo Venäläinen (PDF)

- x86-64 Assembly Language Programming with Ubuntu - Ed Jorgensen (PDF)

- x86 Assembly - Wikibooks

- x86 Disassembly - Wikibooks

- Zen of Assembly Language: Volume I, Knowledge (1990) - Michael Abrash

Non-X86

- Easy 6502 - Nick Morgan

- Machine Code for Beginners [Z80 and 6502 CPUs] - Lisa Watts and Mike Wharton (PDF) (Scroll to bottom and click on book cover)

- Machine Language for Beginners - Richard Mansfield [6502 CPU]

- Programmed Introduction to MIPS Assembly Language

- The Second Book of Machine Language

AutoHotkey

Autotools

Awk

- An Awk Primer - Wikibooks

- Awk - Bruce Barnett

- Gawk: Effective AWK Programming - Arnold D. Robbins (HTML, PDF)

Bash

- Advanced Bash-Scripting Guide - M. Cooper (HTML)

- Bash Guide for Beginners (2008) - M. Garrels (HTML)

- Bash Notes for Professionals - Compiled from StackOverflow documentation (PDF)

- BASH Programming (2000) - Mike G. (HTML)

- Bash Reference Manual (HTML)

- Bash tutorial - Anthony Scemama (PDF)

- BashGuide - Maarten Billemont (HTML) (PDF)

- Conquering the Command Line - Mark Bates (HTML)

- Getting Started with BASH (HTML)

- Google Shell Style Guide - Paul Armstrong (HTML)

- Introduction to Bash Scripting - Bobby Iliev (Markdown, PDF)

- Introduction to the Command Line - Launch School (HTML)

- Linux Shell Scripting Tutorial - A Beginner's Handbook (2002) - Vivek G. Gite (HTML)

- Linux Shell Scripting Tutorial (LSST) v2.0 - Vivek Gite (HTML)

- Slackbook (2005) - Alan Hicks, Chris Lumens, David Cantrell and Logan Johnson (HTML, DocBook, Postscript, PDF)

- The Bash Academy - Maarten Billemont (HTML)

- The Linux Command Line - William E. Shotts, Jr. (PDF)

- Writing Shell Scripts - William E. Shotts, Jr. (HTML)

Basic

- 10 PRINT CHR$(205.5+RND(1)); : GOTO 10 - Nick Montfort, Patsy Baudoin, John Bell, Ian Bogost, Jeremy Douglass, Mark C. Marino, Michael Mateas, Casey Reas, Mark Sample, Noah Vawter

- A beginner's guide to Gambas - John W. Rittinghouse (PDF)

- Pick/Basic: A Programmer's Guide - Jonathan E. Sisk

- Visual Basic Essentials

BETA

- MIA 90-02: BETA Compiler - Reference Manual - Mjølner Informatics

- MIA 94-26: BETA Language Introduction - Tutorial - Mjølner Informatics

- MIA 99-41: BETA Language Modifications - Reference Manual - Mjølner Informatics

- MIA 99-42: The Fragment System: Further Specification - Mjølner Informatics

- Object-Oriented Programming in the BETA Programming Language - Ole Lehrmann Madsen, Birger Møller-Pedersen, Kristen Nygaard

Blazor

- Blazor: A Beginner's Guide - Ed Charbeneau (PDF) (email address requested, not required)

- Blazor for ASP.NET Web Forms Developers - Daniel Roth, Jeff Fritz, Taylor Southwick (PDF)

C

- 256-Color VGA Programming in C - David Brackeen

- A Tutorial on Pointers and Arrays in C - Ted Jensen (PDF)

- An Introduction to C & GUI Programming - Simon Long (PDF)

- Bare-metal programming for ARM - Daniels Umanovskis (PDF)

- Beej's Guide to C Programming - B. Hall (HTML, PDF)

- Beej's Guide to Network Programming - Using Internet Sockets - B. Hall

- Beej's Guide to the GNU Debugger (GDB) - B. Hall (HTML)

- Build Your Own Lisp - Daniel Holden

- C Elements of Style - Steve Oualline

- C for Python Programmers - Carl Burch

- C Notes for Professionals - Compiled from StackOverflow Documentation (PDF)

- C Programming - Wikibooks

- C Programming Boot Camp - Paul Gribble

- Deep C

- Essential C - Nick Parlante (PDF)

- Everything you need to know about pointers in C - Peter Hosey

- Functional C (1997) - Pieter H. Hartel, Henk Muller (PDF)

- Hashing - Prakash Hegade

- Learn to Code With C - The MagPi Essentials (PDF)

- Learning GNU C - Ciaran O’Riordan (PDF)

- Modeling with Data - Ben Klemens (PDF)

- Modern C - Jens Gustedt (PDF)

- Object-Oriented Programming With ANSI-C (PDF)

- Programming in C - Bharat Kinariwala & Tep Dobry

- Programming in C - Kishori Mundargi

- Structures and C - Prakash Hegade

- The C book - Mike Banahan, Declan Brady and Mark Doran (PDF, HTML)

- The Craft of Text Editing or A Cookbook for an Emacs - Craig A. Finseth

- The Current C Programming Language Standard – ISO/IEC 9899:2018 (C17/C18), Draft - Open Standards Org - www.open-std.org (PDF)

- The GNU C Programming Tutorial - Mark Burgess, Ron Hale-Evans (PDF)

- The GNU C Reference Manual - Trevis Rothwell, James Youngman (HTML) (PDF)

- The little book about OS development - Erik Helin, Adam Renberg

- The New C Standard - An Economic and Cultural commentary (2009) - Derek M. Jones (PDF)

- TONC GBA Programming - Game Boy Advance Development

C Sharp

- C# Essentials

- C# Notes for Professionals - Compiled from StackOverflow documentation (PDF)

- C# Programming - Wikibooks

- C# Programming Yellow Book - Rob Miles (PDF) (2019)

- C# Smorgasbord - Filip Ekberg (HTML) (PDF, EPUB, MOBI) (2018)

- C# Tips - Jason Roberts (Leanpub account or valid email requested)

- Creating Mobile Apps with Xamarin.Forms C#

- Daily Design Patterns - Matthew P Jones (PDF)

- Data Structures and Algorithms with Object-Oriented Design Patterns in C# - Bruno Preiss

- Dissecting a C# Application - Christian Holm, Bernhard Spuida, Mike Kruger

- Fundamentals of Computer Programming with C# - Svetlin Nakov, Veselin Kolev & Co (HTML, PDF)

- High level asynchronous programming with Reactive Extensions - Emil Petro

- Introduction to Rx

- Learn C# in Y Minutes

- Mastering Xamarin UI Development, Second Edition - Steven F. Daniel (Packt account required)

- Object Oriented Programming using C# - Simon Kendal, Bookboon. (email address requested, not required)

- Threading in C#

- Xamarin.Forms for macOS Succinctly - Alessandro Del Sole

- Xamarin.Forms Succinctly - Alessandro Del Sole

C++

- C++ Annotations - Frank B. Brokken (HTML, PDF)

- C++ Core Guidelines - Editors: Bjarne Stroustrup, Herb Sutter

- C++ GUI Programming With Qt 3 - Jasmin Blanchette, Mark Summerfield (PDF)

- C++ Language

- C++ Notes for Professionals - Compiled from StackOverflow Documentation (PDF)

- C++ Succinctly, Syncfusion (PDF, Kindle) (email address requested, not required)

- C++ Tricks

- CS106X Programming Abstractions in C++

- Elements of Programming - Alexander Stepanov and Paul McJones - (PDF)

- Financial Numerical Recipes in C++ - Bernt Arne Ødegaard

- Game Programming Patterns

- Google's C++ Style Guide

- Hands-On System Programming with C++ - Dr. Rian Quinn (Packt account required)

- How to make an Operating System - Samy Pesse

- How To Think Like a Computer Scientist: C++ Version - Allen B. Downey

- Introduction to Design Patterns in C++ with Qt 4 - Alan Ezust, Paul Ezust (PDF)

- Joint Strike Fighter, C++ Coding Standards, by Bjarne Stroustrup (PDF)

- LearnCpp.com (HTML)

- Matters Computational: Ideas, Algorithms, Source Code, by Jorg Arndt (PDF)

- Open Data Structures (In C++) - Pat Morin (PDF)

- Programming Fundamentals - A Modular Structured Approach using C++ - Kenneth Leroy Busbee (PDF)

- Software Design Using C++ - Br. David Carlson and Br. Isidore Minerd

- Software optimization resources by Agner Fog

- The Boost C++ libraries - Boris Schäling (HTML)

- The Rook's Guide to C++ - Jeremy Hansen (PDF)

- The Ultimate Question of Programming, Refactoring, and Everything

- Think C++: How To Think Like a Computer Scientist - Allen B. Downey (PDF)

- Thinking in C++, Second Edition, Vol. 1. - Bruce Eckel (Vol. 2)

Chapel

Cilk

Clojure

- A Brief Beginner’s Guide To Clojure

- Clojure - Functional Programming for the JVM - R. Mark Volkmann

- Clojure by Example - Hirokuni Kim

- Clojure community-driven documentation

- Clojure Cookbook

- Clojure Distilled Beginner Guide

- Clojure for the Brave and True

- Clojure in Small Pieces - Rich Hickey (PDF)

- Clojure Koans

- Clojure Programming - Wikibooks

- ClojureScript Koans

- ClojureScript Unraveled (HTML)

- Data Sorcery with Clojure

- Modern cljs

- SICP Distilled - An idiosyncratic tour of SICP in Clojure

- The Clojure Style Guide

COBOL

- COBOL Programming Fundamental (PDF)

- Enterprise COBOL for z/OS documentation library

- Introduction to the COBOL Language

- OpenCOBOL 1.1 - Programmer's Guide (PDF)